Process vs Thread - The Foundation Every Backend Engineer Must Know

Series: Backend Engineering Mastery

Reading Time: 12 minutes

Level: Junior to Senior Engineers

The One Thing to Remember

Process = house, Thread = room in the house.

But here's why you care: Ever had your entire Python app freeze because one request got stuck? Or watched your server crash when one user's code had a bug? That's the difference between processes and threads in action.

Threads share memory within a process; processes don't share memory with each other. This simple distinction drives almost every concurrency decision you'll make—and explains most of the "weird" bugs you've seen.

Why This Matters for Your Career

Every performance bug, every scaling decision, every "why is this slow?" investigation eventually comes back to understanding processes and threads.

I've seen senior engineers spend 10 hours debugging a race condition that would have been obvious had they understood threads. I've watched teams choose the wrong architecture because they didn't know when to use processes vs threads.

This isn't academic knowledge—it's the difference between:

- Debugging a race condition in 10 minutes vs 10 hours

  - Knowing threads share memory = you immediately check for synchronization bugs

  - Not knowing = you blame the database, the network, everything except the actual problem

- Choosing the right architecture for a system with 10K concurrent users

  - Understanding isolation vs efficiency = you pick the right pattern

  - Not understanding = you guess, and production breaks at 2AM

- Understanding why your Python app doesn't scale with threads (the GIL)

  - Knowing the GIL exists = you use multiprocessing for CPU work

  - Not knowing = you add more threads, nothing improves, and you're confused

The good news: Once you internalize this, you'll see it everywhere. Every production issue, every design decision, every interview question—it all comes back to this foundation.

Quick Win: Check Your Current Setup

Before we dive deeper, let's see what you're actually running:

```bash
# How many processes match your app? (unlike `ps aux | grep`, pgrep doesn't count itself)
pgrep -fc your-app-name

# How many threads (NLWP) does each matching process have?
ps -o pid,nlwp,cmd -p "$(pgrep -d, -f your-app-name)"

# If you see 1 process with 50 threads → thread-based
# If you see 10 processes with 5 threads each → hybrid (common!)
```

What to look for:

- 1 process, many threads: Thread-based (good for I/O, watch for race conditions)

- Many processes, few threads each: Hybrid (most production systems)

- Many processes, 1 thread each: Process-based (maximum isolation)
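If you'd rather ask from inside a running Python service, the standard library exposes similar information. A minimal sketch; the `report` helper is our own illustrative name, and note that `threading.active_count()` counts Python `Thread` objects, so it may miss native threads started by C extensions that `ps` would still show:

```python
import os
import threading


def report() -> dict:
    """Return this process's PID and how many Python threads it is running."""
    return {
        "pid": os.getpid(),                                # the process (the "house")
        "python_threads": threading.active_count(),        # live Thread objects (the "rooms")
        "current_thread": threading.current_thread().name,
    }


if __name__ == "__main__":
    # Start a few idle threads so the count visibly changes
    stop = threading.Event()
    workers = [threading.Thread(target=stop.wait, daemon=True) for _ in range(3)]
    for w in workers:
        w.start()
    print(report())  # python_threads should be at least 4: main + 3 workers
    stop.set()
    for w in workers:
        w.join()
```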

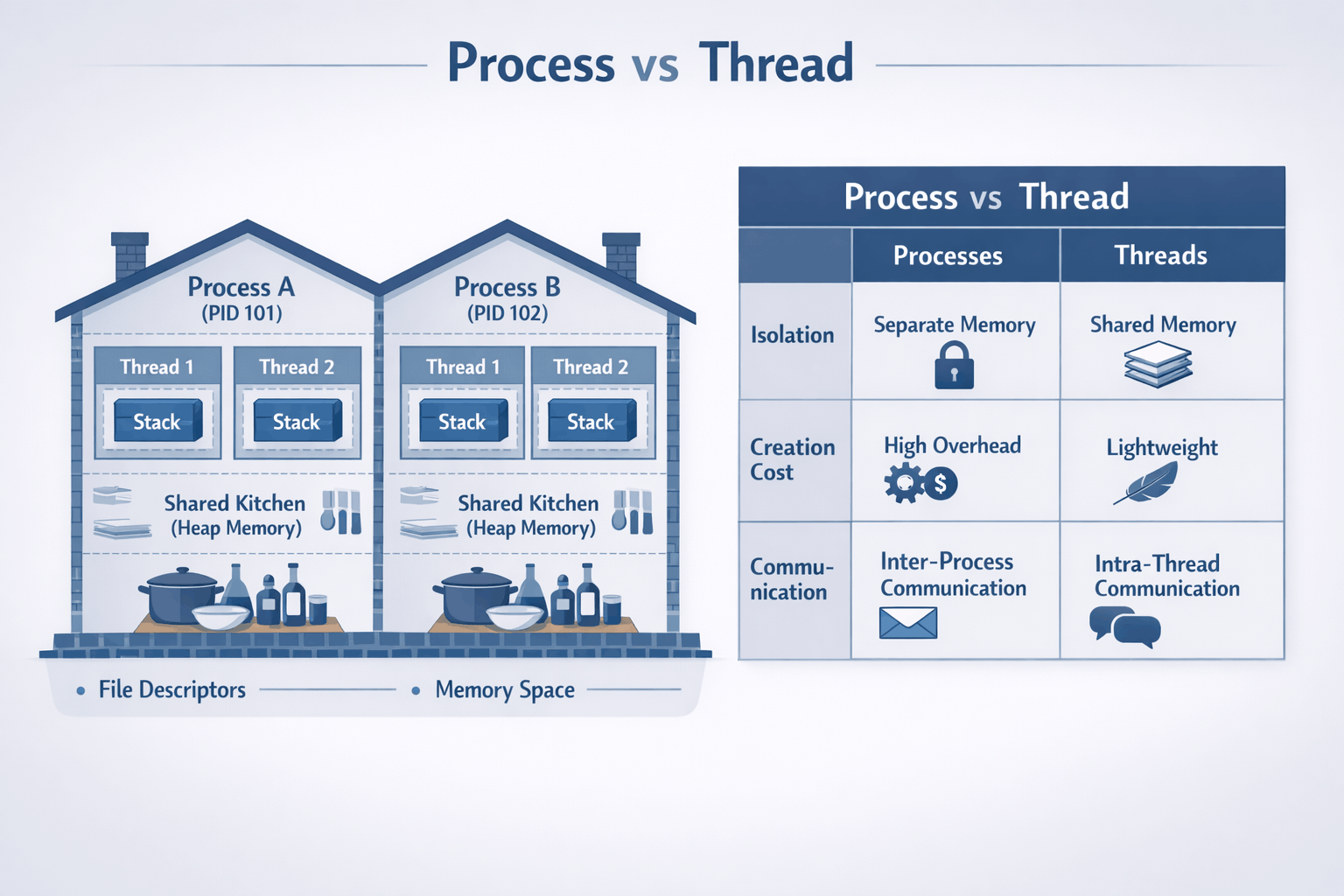

The Mental Model

Processes: Isolated Houses

Think of a process as a house:

- Has its own address (memory space) - neighbors can't see inside

- Has its own resources (file handles, network connections) - separate utilities

- Walls separate it from neighbors - fire in one house doesn't spread

- If your neighbor's house catches fire, yours is protected

Real example: When Chrome runs each tab in a separate process, one malicious website can't access another tab's data. The "walls" (process isolation) protect you.

Threads: Rooms in a House

A thread is a room within that house:

- All rooms share the same address - same street number

- Share the kitchen (heap memory) and front door (I/O resources) - common spaces

- People in different rooms can easily communicate by shouting - fast communication

- But they can also accidentally mess up shared spaces - race conditions!

Real example: A web server with multiple threads can share a connection pool (the "kitchen") but if two threads try to grab the same connection at once without coordination, chaos ensues.
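One common way to add that coordination is to hand connections out through a thread-safe queue, so each connection is checked out by at most one thread at a time. A minimal sketch, assuming a hypothetical `FakeConnection` class in place of a real database driver (real drivers and frameworks usually ship their own pool; this only illustrates the idea):

```python
import queue
import threading
from contextlib import contextmanager


class FakeConnection:
    """Hypothetical stand-in for a real DB connection."""

    def __init__(self, conn_id: int):
        self.conn_id = conn_id


class ConnectionPool:
    def __init__(self, size: int):
        # queue.Queue does its own locking, so get()/put() are safe across threads
        self._idle = queue.Queue()
        for i in range(size):
            self._idle.put(FakeConnection(i))

    @contextmanager
    def connection(self, timeout: float = 5.0):
        conn = self._idle.get(timeout=timeout)  # blocks until a connection is free
        try:
            yield conn  # only this thread holds `conn` right now
        finally:
            self._idle.put(conn)  # always return it, even if the caller raised


pool = ConnectionPool(size=2)
in_use = set()
in_use_lock = threading.Lock()
double_checkouts = []  # would record a connection handed to two threads at once


def handle_request():
    with pool.connection() as conn:
        with in_use_lock:
            if conn.conn_id in in_use:
                double_checkouts.append(conn.conn_id)
            in_use.add(conn.conn_id)
        # ... run queries here ...
        with in_use_lock:
            in_use.discard(conn.conn_id)


threads = [threading.Thread(target=handle_request) for _ in range(20)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print("double checkouts:", double_checkouts)  # expected: []
```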

Visual Model

```
┌─────────────────────────────────────────────────────────────────────┐
│                          OPERATING SYSTEM                           │
│                     (The neighborhood manager)                      │
├───────────────────────────────┬─────────────────────────────────────┤
│ PROCESS A                     │ PROCESS B                           │
│ (House #1234)                 │ (House #5678)                       │
│ ┌───────────────────────┐     │ ┌───────────────────────┐           │
│ │ Address Space         │     │ │ Address Space         │           │
│ │ ┌─────────────────┐   │     │ │ ┌─────────────────┐   │           │
│ │ │ HEAP            │   │     │ │ │ HEAP            │   │           │
│ │ │ (shared by      │   │     │ │ │ (shared by      │   │           │
│ │ │  threads)       │   │     │ │ │  threads)       │   │           │
│ │ │ "The Kitchen"   │   │     │ │ │ "The Kitchen"   │   │           │
│ │ └─────────────────┘   │     │ │ └─────────────────┘   │           │
│ │ ┌──────┐ ┌──────┐     │     │ │ ┌──────┐              │           │
│ │ │Stack │ │Stack │     │     │ │ │Stack │              │           │
│ │ │ T1   │ │ T2   │     │     │ │ │ T1   │              │           │
│ │ │Room1 │ │Room2 │     │     │ │ │Room1 │              │           │
│ │ └──────┘ └──────┘     │     │ │ └──────┘              │           │
│ └───────────────────────┘     │ └───────────────────────┘           │
│                               │                                     │
│ File Descriptors: 3,4,5       │ File Descriptors: 3,4               │
│ PID: 1234                     │ PID: 5678                           │
│ (Can't see Process B!)        │ (Can't see Process A!)              │
└───────────────────────────────┴─────────────────────────────────────┘
```

Key insight: Process A's threads can't see Process B's memory; the only way to share is to set it up explicitly through IPC (for example, a shared memory segment).

But Thread 1 and Thread 2 in Process A share the same heap (kitchen).
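You can see both halves of that insight in a few lines of Python: a local variable lives in each thread's own stack frame, while an object reachable from module scope lives on the shared heap. A small illustrative sketch (all names are our own):

```python
import threading

shared_log = []  # one list object on the heap, visible to every thread
log_lock = threading.Lock()


def worker(name: str, n: int) -> None:
    local_total = 0  # lives in this thread's own stack frame; no other thread sees it
    for i in range(n):
        local_total += i
    with log_lock:  # writes to the shared list are coordinated
        shared_log.append((name, local_total))


threads = [threading.Thread(target=worker, args=(f"T{k}", 1000)) for k in range(1, 3)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Both threads wrote into the same list; neither could touch the other's local_total
print(sorted(shared_log))  # [('T1', 499500), ('T2', 499500)]
```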

The Comparison Table

| Aspect | Process | Thread |
|---|---|---|
| Memory | Isolated address space | Shared address space |
| Creation cost | Heavier (fork/exec sets up a new address space and kernel bookkeeping) | Lighter (a new stack and registers inside the existing address space) |
| Communication | IPC (pipes, sockets, shared memory) | Direct memory access |
| Crash impact | Isolated (one crash doesn't affect others) | Takes down entire process |
| Context switch | More expensive (address-space switch, possible TLB flush) | Cheaper (same address space, no TLB flush) |
| Debugging | Easier (isolated state) | Harder (shared state, race conditions) |
| Memory overhead | Higher (own copy of the runtime and heap; often tens of MB for Python or JVM apps) | Lower (mainly the stack: typically 8MB reserved on Linux, 1MB on Windows, but only touched pages use RAM) |

Quick Jargon Buster

- IPC (Inter-Process Communication): How processes talk to each other. Like sending mail between houses—pipes, sockets, or shared memory segments.

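- TLB (Translation Lookaside Buffer): The CPU's "address book" cache for translating virtual addresses to physical ones. Switching processes can flush it (modern CPUs can tag entries per address space to soften this), which forces slower page-table lookups afterward.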

- Context Switch: OS switching from one thread/process to another. Like changing tasks—saves current state, loads new state.

- GIL (Global Interpreter Lock): CPython's lock that lets only one thread execute Python bytecode at a time. Threads can still overlap while waiting on I/O (the GIL is released during blocking calls), but pure-Python CPU work is serialized.

Trade-offs: The Decision Framework

When to Use Processes

✅ Choose processes when:

- Isolation is critical

  - One component crashing shouldn't bring down others

  - Example: Chrome uses process-per-tab so one bad tab doesn't crash the browser

- Security boundaries matter

  - Different trust levels (user code vs system code)

  - Multi-tenant systems where tenants shouldn't see each other's data

- You need to scale beyond one machine

  - Processes are the natural unit for distributed systems

  - Each server runs a process; easier to reason about

- CPU-bound work in Python/Ruby

  - GIL (Global Interpreter Lock): Python's "one thread at a time" rule for CPU work

  - Threads can't actually run Python code in parallel (they can overlap while waiting on I/O, though)

  - `multiprocessing` gives you actual parallel execution by using separate processes

  - Why this matters: If you're doing heavy computation in Python, threads won't help; you need processes

Trade-off cost: Higher memory usage, slower communication between components
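To see the GIL's effect yourself, time the same CPU-bound job on a thread pool and on a process pool. A rough sketch using `concurrent.futures`; `count_primes` is a deliberately slow, illustrative workload, and absolute timings depend entirely on your machine and Python build:

```python
import time
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit: int) -> int:
    """Deliberately naive, pure-Python CPU work: count primes below `limit`."""
    count = 0
    for n in range(2, limit):
        d = 2
        while d * d <= n:
            if n % d == 0:
                break
            d += 1
        else:  # no divisor found, so n is prime
            count += 1
    return count


def timed_run(executor: Executor, jobs: list) -> tuple:
    """Run count_primes over `jobs` on the given executor; return (total, seconds)."""
    start = time.perf_counter()
    with executor:
        total = sum(executor.map(count_primes, jobs))
    return total, time.perf_counter() - start


if __name__ == "__main__":  # required for process pools on spawn-based platforms
    jobs = [50_000] * 4
    for label, make in [("threads", ThreadPoolExecutor), ("processes", ProcessPoolExecutor)]:
        total, secs = timed_run(make(max_workers=4), jobs)
        print(f"{label:>9}: total={total} in {secs:.2f}s")
    # On a standard (GIL) CPython build with 4+ cores, expect the process pool to
    # finish noticeably faster; the thread pool runs about as slowly as a serial loop.
```

The optional free-threaded CPython build introduced in 3.13 removes the GIL, so on that build the thread pool may scale as well; benchmark on the interpreter you actually deploy.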

When to Use Threads

✅ Choose threads when:

- Shared state is natural

  - In-memory caches that all handlers need

  - Connection pools shared across requests

- I/O-bound workloads

  - While one thread waits for the database, others can work

  - Network servers, API gateways

- Memory is constrained

  - 10,000 threads use less memory than 10,000 processes

  - Embedded systems, mobile apps

- Low-latency communication needed

  - Shared memory access is nanoseconds

  - IPC is microseconds to milliseconds

Trade-off cost: Harder debugging, risk of race conditions, crash affects all threads
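For I/O-bound work the picture flips: a thread blocked on I/O releases the GIL, so a thread pool overlaps the waiting. A minimal sketch in which `time.sleep` stands in for a database or HTTP call (`fake_io_call` is a hypothetical name):

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io_call(request_id: int) -> int:
    """Hypothetical I/O call: sleeping releases the GIL, like waiting on a socket."""
    time.sleep(0.2)
    return request_id * 10


def run_requests(n: int, workers: int) -> tuple:
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(fake_io_call, range(n)))  # map keeps input order
    return results, time.perf_counter() - start


results, elapsed = run_requests(n=10, workers=10)
# Ten 0.2s waits overlap, so this takes roughly 0.2s rather than 2s
print(f"{len(results)} results in {elapsed:.2f}s")
```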

The Hybrid Approach (Most Production Systems)

Most real systems use both:

```
┌────────────────────────────────────────────────────────────┐
│                  Production Architecture                   │
├────────────────────────────────────────────────────────────┤
│                                                            │
│  ┌───────────────┐ ┌───────────────┐ ┌───────────────┐     │
│  │ Process 1     │ │ Process 2     │ │ Process 3     │     │
│  │ (Worker)      │ │ (Worker)      │ │ (Worker)      │     │
│  │ ┌───────────┐ │ │ ┌───────────┐ │ │ ┌───────────┐ │     │
│  │ │ Thread 1  │ │ │ │ Thread 1  │ │ │ │ Thread 1  │ │     │
│  │ │ Thread 2  │ │ │ │ Thread 2  │ │ │ │ Thread 2  │ │     │
│  │ │ Thread 3  │ │ │ │ Thread 3  │ │ │ │ Thread 3  │ │     │
│  │ └───────────┘ │ │ └───────────┘ │ │ └───────────┘ │     │
│  └───────────────┘ └───────────────┘ └───────────────┘     │
│          │                 │                 │             │
│          └─────────────────┼─────────────────┘             │
│                            │                               │
│                      ┌─────┴─────┐                         │
│                      │   Load    │                         │
│                      │ Balancer  │                         │
│                      └───────────┘                         │
└────────────────────────────────────────────────────────────┘
```

Example: Gunicorn with workers and threads

```bash
gunicorn --workers=4 --threads=2 app:app
```

4 processes × 2 threads = 8 concurrent request handlers

Why this works:

- Process isolation: One worker crashing doesn't take down the others, and Gunicorn's master process starts a replacement

- Thread efficiency: Share connection pools within worker

- Scale: Add more workers as load increases
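The same setup can live in a Gunicorn config file, which is plain Python. A minimal sketch of a `gunicorn.conf.py`; `bind`, `workers`, `threads`, `worker_class`, `max_requests`, and `max_requests_jitter` are standard Gunicorn settings, while the specific address and recycling numbers are assumptions to adapt:

```python
# gunicorn.conf.py: load with `gunicorn -c gunicorn.conf.py app:app`

bind = "0.0.0.0:8000"     # assumed address/port
workers = 4               # 4 OS processes: a crash in one leaves the other 3 serving
threads = 2               # 2 threads per worker share that worker's memory and pools
worker_class = "gthread"  # threaded worker; Gunicorn switches to it anyway when threads > 1

# Recycle each worker after roughly this many requests (jitter staggers restarts);
# a cheap guard against slow memory leaks in long-lived processes.
max_requests = 1000
max_requests_jitter = 100
```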

Code Examples

Demonstrating Thread Shared Memory (and Bugs!)

```python
import threading

# Shared variable - threads can all see and modify this
shared_counter = 0

def thread_worker():
    global shared_counter
    for _ in range(100000):
        shared_counter += 1  # NOT atomic! Read-modify-write
        # This is actually:
        # 1. Read shared_counter (e.g., 42)
        # 2. Add 1 (43)
        # 3. Write back (43)
        # Another thread might read 42 between steps 1 and 3!

# Create 2 threads
threads = [threading.Thread(target=thread_worker) for _ in range(2)]

# Start them
for t in threads:
    t.start()

# Wait for completion
for t in threads:
    t.join()

# Expected: 200000 (100000 × 2)
# Actual: often less, like 134672 (lost updates from the race)
# Newer CPython versions (3.10+) switch threads less often, so you may see 200000
# on some runs; the code is still racy, the bug is just harder to trigger
print(f"Expected: 200000, Got: {shared_counter}")
```

Fixing with Proper Synchronization

```python
import threading

shared_counter = 0
lock = threading.Lock()

def thread_worker_safe():
    global shared_counter
    for _ in range(100000):
        with lock:  # Only one thread can hold the lock
            shared_counter += 1

threads = [threading.Thread(target=thread_worker_safe) for _ in range(2)]

for t in threads:
    t.start()

for t in threads:
    t.join()

print(f"Expected: 200000, Got: {shared_counter}")  # Now correct!
```

Process Isolation Demo

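```python
import multiprocessing
import os

# A module-level (global) variable: every process gets its OWN copy of it
counter = 0

def process_worker(name):
    global counter
    for _ in range(100000):
        counter += 1  # modifies this process's private copy only
    print(f"Process {name} (PID: {os.getpid()}): counter = {counter}")
    # No race condition possible - each process has its own counter!

if __name__ == "__main__":  # required where processes are spawned (Windows, macOS)
    # Create 2 processes
    processes = [
        multiprocessing.Process(target=process_worker, args=(i,))
        for i in range(2)
    ]
    for p in processes:
        p.start()
    for p in processes:
        p.join()

    # Each child prints 100000 - no interference; the parent's copy was never touched
    print(f"Parent (PID: {os.getpid()}): counter = {counter}")  # prints 0
```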

Real-World Trade-off Stories

Instagram: GIL Forced the Decision

Situation: Instagram's Django app wasn't scaling with threads due to Python's GIL.

Decision: Move to multi-process model with Gunicorn workers.

Trade-off accepted: Higher memory usage (each worker loads Django), but actual parallel execution.

Result: Linear scaling with added workers. They went from struggling with 100 concurrent users to handling thousands.

Lesson: Know your runtime's limitations. Python threads don't parallelize CPU work.

Chrome: Isolation Over Efficiency

Situation: Early browsers used single-process, multi-threaded architecture. One bad tab could crash the entire browser.

Decision: Chrome was built around a process-per-tab architecture from launch.

Trade-offs accepted:

- ~50MB extra memory per tab

- IPC overhead for communication between each tab's renderer process and the main browser process

- More complex architecture

What they gained:

- Security: Malicious sites can't access other tabs' memory

- Stability: One tab crashing doesn't kill others

- Performance: Tabs can use multiple CPU cores

Lesson: Sometimes isolation is worth the resource cost.

Discord: The Elixir/Erlang Choice

Situation: Discord needed to handle millions of concurrent connections with high reliability.

Decision: Used Elixir (running on Erlang VM), which uses lightweight processes (actors).

Why this worked:

- Erlang processes are ~2KB each (not OS processes)

- Isolation: One process crashing doesn't affect others

- Built-in supervision trees restart failed processes

- Message passing prevents shared state bugs

Lesson: Sometimes the runtime/language choice determines your concurrency model.

AI Model Serving: Why Processes Win

Situation: You're serving multiple ML models (image classification, text generation, etc.). One model crashes or uses too much GPU memory.

Decision: Run each model in a separate process.

Why processes:

- Isolation: One model's crash doesn't kill others

- Resource limits: Set memory limits per process (e.g., 4GB per model)

- Security: Untrusted user code runs in isolated process

- Scaling: Easy to scale models independently

Example architecture:

```
┌─────────────────────────────────────────┐
│ API Gateway (Process 1)                 │
│  └─ Routes to model processes           │
├─────────────────────────────────────────┤
│ Image Model (Process 2)                 │
│  └─ 4GB memory limit                    │
│  └─ Thread pool for batch requests      │
├─────────────────────────────────────────┤
│ Text Model (Process 3)                  │
│  └─ 8GB memory limit                    │
│  └─ Thread pool for batch requests      │
└─────────────────────────────────────────┘
```

Trade-off accepted:

- Higher memory overhead (each process loads the model)

- IPC overhead for routing requests

- More complex deployment

What you gain:

- One model OOM doesn't kill others

- Can set per-model resource limits

- Can scale models independently

- Better security boundaries

Lesson: In AI systems, isolation often matters more than efficiency. One bad model shouldn't take down your entire service.
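The isolation argument can be demonstrated with the standard library alone: run each "model" as its own OS process and let a small supervisor watch exit codes. A sketch under heavy assumptions: the inline `-c` scripts are hypothetical stand-ins for real model servers, and real deployments would typically rely on a process manager, container orchestrator, or cgroup memory limits rather than this loop:

```python
import subprocess
import sys

# Hypothetical "model servers": each runs as a separate Python process
MODELS = {
    "image-model": "print('image model: ok')",
    "text-model": "raise MemoryError('pretend we ran out of memory')",  # this one crashes
}


def run_isolated(name: str, code: str, max_restarts: int = 2) -> int:
    """Run `code` in its own process, retrying on failure; return the last exit code."""
    for attempt in range(1 + max_restarts):
        proc = subprocess.run([sys.executable, "-c", code], capture_output=True, text=True)
        if proc.returncode == 0:
            return 0
        # The crash stayed inside the child; this supervisor process is unaffected
        print(f"{name} exited with {proc.returncode} (attempt {attempt + 1})")
    return proc.returncode


if __name__ == "__main__":
    statuses = {name: run_isolated(name, code) for name, code in MODELS.items()}
    print(statuses)  # {'image-model': 0, 'text-model': 1}
```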

Common Confusions (Cleared Up)

"But I use async/await, not threads!"

Reality: Async/await still runs on threads: usually a single thread running an event loop (Python's asyncio, Node.js), sometimes a pool of threads (e.g., Rust's Tokio, .NET). The difference:

- Threads: OS manages switching between threads (preemptive multitasking)

- Async: Your code explicitly yields control (cooperative multitasking)

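Either way, the process vs thread model still applies: coroutines in one process share its memory, and a single blocking call stalls every task on that event loop. Async doesn't eliminate the need to understand these concepts.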
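A quick way to confirm that Python's asyncio is cooperative multitasking on one thread: run several coroutines concurrently and record which thread each one ran on. A minimal sketch; the `fetch` coroutine is hypothetical, with `asyncio.sleep` standing in for network I/O:

```python
import asyncio
import threading


async def fetch(i: int) -> int:
    await asyncio.sleep(0.1)      # yields control to the event loop (cooperative switch)
    return threading.get_ident()  # which thread ran this coroutine?


async def main() -> set:
    thread_ids = await asyncio.gather(*(fetch(i) for i in range(5)))
    return set(thread_ids)


ids = asyncio.run(main())
print(f"5 concurrent tasks ran on {len(ids)} thread(s)")  # 1 thread
```

If you swap `asyncio.sleep` for the blocking `time.sleep`, the five tasks run one after another, because nothing yields back to the loop.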

"Go uses goroutines, not threads!"

Reality: Goroutines are lightweight threads managed by Go's runtime. They're still threads, just more efficient (Go runtime multiplexes many goroutines onto fewer OS threads). The process/thread distinction still applies—goroutines within a process share memory.

"Docker containers are processes, right?"

Reality: Yes! Each container is essentially a process (or a group of processes). Understanding process isolation helps you understand container isolation. Containers add Linux namespaces (separate filesystem, network, and PID views) and cgroups (resource limits) on top of ordinary process isolation.

"Node.js is single-threaded, so this doesn't apply!"

Reality: Node.js uses a single main thread, but:

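- libuv keeps a thread pool for work the OS can't do asynchronously (file system calls, DNS lookups, some crypto and compression); network I/O stays on the event loop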

- Worker threads exist for CPU-intensive work

- Understanding processes vs threads helps you decide when to use worker threads vs worker processes

Try It Yourself (30 seconds each)

See Processes on Your System

```bash
# See all processes
ps aux | head -20

# See YOUR processes
ps -u "$USER" | head -10

# See process tree (parent-child relationships)
pstree -p $$ | head -20
```

See Threads Within a Process

```bash
# See threads (LWP = Light Weight Process = thread)
ps -eLf | head -20

# See threads of a specific process (e.g., your shell)
ps -T -p $$

# Watch the thread count (NLWP) of a Python process in real time
# First, run a Python script, then:
watch -n 1 "ps -o pid,nlwp,cmd -p \$(pgrep python | head -1)"
```

Monitor Context Switches

```bash
# See context switches per second (5 samples, 1 second apart)
vmstat 1 5

# Look at the 'cs' column: higher = more switching = potential overhead
```
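You can also read context-switch counters for a single process from Python on Linux and macOS. A small sketch using `resource.getrusage` (Unix-only; `ru_nvcsw` counts voluntary switches, typically from blocking on I/O, sleeps, or locks, and `ru_nivcsw` counts involuntary ones, where the scheduler preempted the process):

```python
import resource
import time


def context_switches() -> tuple:
    """(voluntary, involuntary) context switches so far for this process."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_nvcsw, usage.ru_nivcsw


before = context_switches()
for _ in range(50):
    time.sleep(0.001)  # each sleep blocks, which typically causes a voluntary switch
after = context_switches()

print("voluntary:", after[0] - before[0], "involuntary:", after[1] - before[1])
```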

Common Mistakes and How to Avoid Them

Mistake #1: "Threads Are Always Faster"

Why it's wrong:

- Context switching has cost (~1-10μs)

- Cache invalidation hurts performance

- Lock contention can make threads slower than single-threaded

Right approach:

- Benchmark your specific workload

- For I/O-bound: threads help

- For CPU-bound with shared state: often slower due to locking

Mistake #2: "Shared Memory Is Free"

Why it's wrong:

- Requires synchronization (locks, atomics)

- Lock contention becomes bottleneck

- Debugging is much harder

Right approach:

- Prefer message passing when possible

- Minimize shared state

- Use immutable data structures
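Here is what "prefer message passing" can look like with plain threads: instead of sharing a counter behind a lock, workers send results over a `queue.Queue`, and a single consumer owns the total. A minimal sketch (names are illustrative):

```python
import queue
import threading

results = queue.Queue()  # the only shared object, and it is thread-safe
SENTINEL = None          # tells the consumer a producer is done


def producer(chunk: range) -> None:
    local_sum = sum(chunk)  # private work: no locks needed
    results.put(local_sum)  # send the result as a message
    results.put(SENTINEL)


def consumer(num_producers: int, out: list) -> None:
    total, finished = 0, 0
    while finished < num_producers:
        msg = results.get()
        if msg is SENTINEL:
            finished += 1
        else:
            total += msg  # only this thread ever touches `total`
    out.append(total)


chunks = [range(0, 50_000), range(50_000, 100_000)]
out = []
threads = [threading.Thread(target=producer, args=(c,)) for c in chunks]
threads.append(threading.Thread(target=consumer, args=(len(chunks), out)))
for t in threads:
    t.start()
for t in threads:
    t.join()
print(out[0])  # 4999950000 == sum(range(100_000))
```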

Mistake #3: "More Threads = More Speed"

Why it's wrong:

- For CPU-bound work, threads beyond the CPU core count just add context-switching overhead

- Each thread consumes memory (stack space)

- Lock contention increases with thread count

Right approach:

- Rule of thumb: threads ≈ CPU cores for CPU-bound work

- For I/O-bound: threads ≈ concurrent I/O operations needed

- Measure, don't guess
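Those rules of thumb can be turned into starting numbers. For I/O-bound pools, one common way to estimate "concurrent I/O operations needed" is Little's Law (in-flight requests = arrival rate × time each request spends waiting). A sketch with assumed traffic numbers; treat the output as a first guess to benchmark, not a final setting:

```python
import math
import os


def cpu_bound_workers() -> int:
    # Roughly one worker per core; os.cpu_count() can return None, so fall back to 1
    return os.cpu_count() or 1


def io_bound_workers(requests_per_sec: int, avg_wait_ms: int) -> int:
    # Little's Law: in-flight requests = arrival rate x time each one spends waiting
    return max(1, math.ceil(requests_per_sec * avg_wait_ms / 1000))


print("CPU-bound pool size:", cpu_bound_workers())
# Assumed traffic: 200 req/s, each waiting ~50 ms on the database
print("I/O-bound pool size:", io_bound_workers(200, 50))  # 10
```

For comparison, Python's `ThreadPoolExecutor` defaults to roughly `min(32, cpu_count + 4)` workers, a compromise aimed at I/O-bound tasks.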

Decision Checklist

Use this checklist when deciding between processes and threads:

□ Do I need crash isolation?

→ Yes: Consider processes

→ No: Threads are fine

□ Do I need to share large amounts of data?

→ Yes: Threads (shared memory) are simpler

→ No: Processes with message passing

□ Is my workload CPU-bound or I/O-bound?

→ CPU-bound in Python/Ruby: Processes (GIL)

→ CPU-bound in Go/Java/C++: Either works

→ I/O-bound: Threads (or async I/O)

□ How many concurrent operations do I need?

→ <100: Either works, choose simpler option

→ 100-10,000: Threads are more memory-efficient

→ >10,000: Consider async I/O (epoll/io_uring)

□ What's my team's expertise?

→ Multi-threading is harder to debug

→ Multi-process is easier to reason about

Memory Trick

"TIPS" for choosing:

- Threads: Share memory, need synchronization

- Isolation: Processes give you crash protection

- Performance: Threads for I/O, processes for CPU

- Simplicity: Processes are easier to reason about

Self-Assessment

Before moving to the next article, make sure you can:

- [ ] Explain why threads share heap but have separate stacks

- [ ] Identify a race condition in code

- [ ] Know when to use `multiprocessing` vs `threading` in Python

- [ ] Explain why Chrome uses process-per-tab

- [ ] Draw the memory model diagram from memory

- [ ] Explain what GIL is and why it matters for Python

- [ ] Know when to use processes vs threads for AI/ML model serving

Key Takeaways

- Threads share memory - fast communication but requires synchronization

- Processes are isolated - safer but higher overhead

- Most production systems use both - processes for isolation, threads for efficiency within each process

- Know your runtime - Python GIL, Erlang actors, Go goroutines all behave differently

- Measure, don't assume - "threads are faster" is often wrong

- Isolation often beats efficiency - especially in AI/ML systems where one model failure shouldn't kill others

What's Next

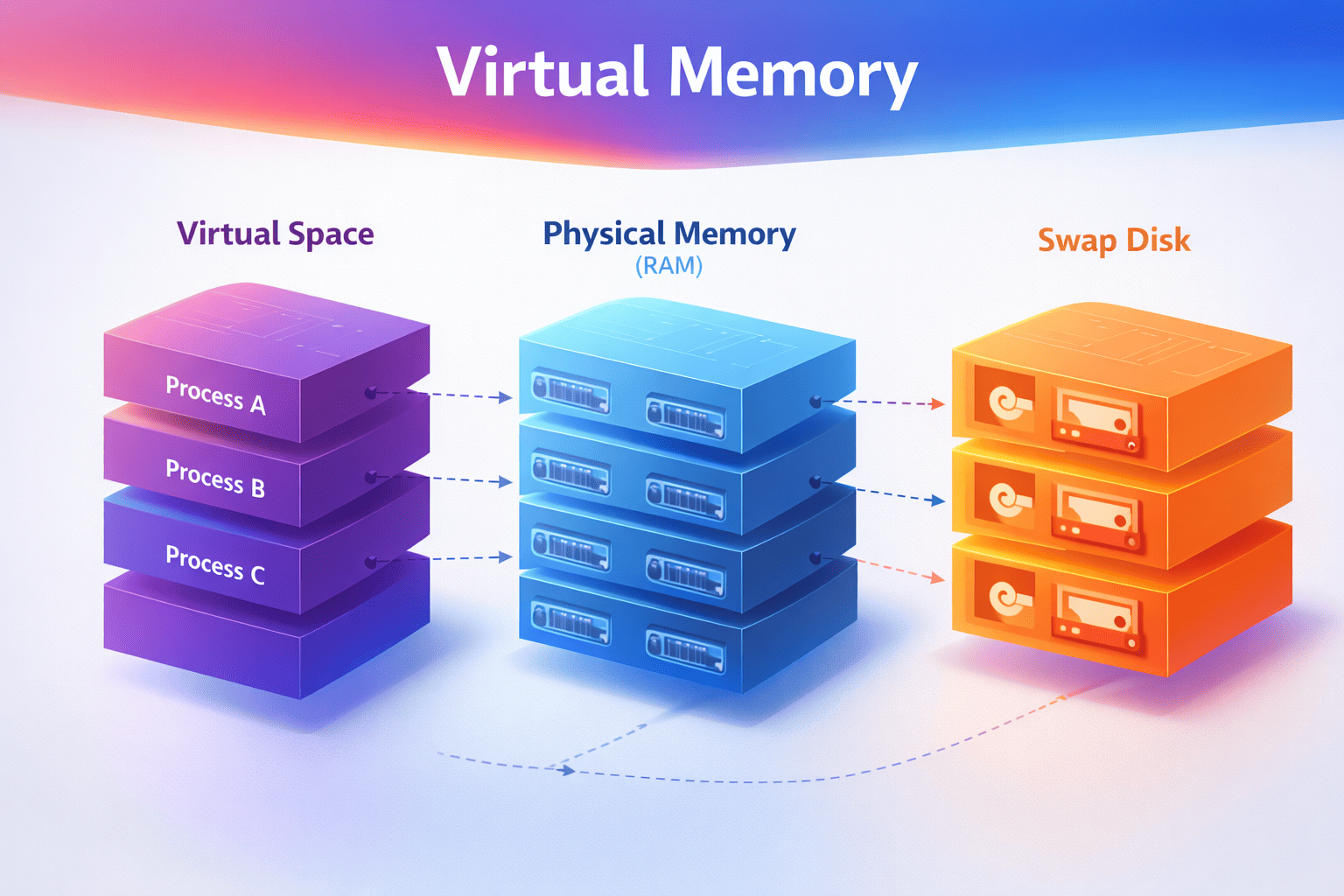

Now that you understand how processes and threads work, the next question is: How does the OS actually manage memory for all these processes?

In the next article, Article 2: Memory Management Demystified - Virtual Memory, Page Faults & Performance, you'll learn:

- Why a "2GB process" might only use 100MB of actual RAM

- How virtual memory creates the illusion of infinite memory

- When page faults kill your performance (and how to avoid them)

- Why adding more RAM doesn't always help

This builds directly on what you learned here—each process gets its own virtual address space, but how does the OS juggle all that physical RAM? Let's find out.

→ Continue to Article 2: Memory Management

This article is part of the Backend Engineering Mastery series. Follow along for comprehensive guides on building robust, scalable systems.